Our commitment to a safer digital space

Our highest priority is ensuring a safe and reliable experience for the visitors, creators and businesses worldwide who trust our platform. To this end, we have invested significantly in both people and technology to swiftly identify and remove harmful content.

Our Trust & Safety team accomplishes this through proactive and reactive reporting workflows.

Our internal tooling and workflows identify and flag high-risk content, which is then automatically removed or sent to our moderation team for manual review.

Our website forms (Violation Report Form and IP Infringement Report Form) and mobile app enable anyone to easily report any content they come across that they believe is in breach of our Terms & Conditions or Community Standards.

Any requests we receive from law enforcement or government bodies are reviewed and promptly actioned when required.

Team structure

With a global team reviewing content and responding to community violation reports 24/7, we can address issues efficiently and remove harmful content as quickly as possible. Multiple escalation pathways exist within the moderation team, and between full-time Trust & Safety managers at Linktree.

All team members have access to regular and comprehensive training, monthly quality assurance checks, and regular meetings with their team leaders. Additionally, due to the nature of the content reviewed, we provide access to mental health support to protect our team’s wellbeing while they protect the Linktree community.

Content moderation actions (Global & EU)

Automated link removals

Manual vs hybrid content moderation

These removals are associated with flagged items that were reviewed by the team and determined to be unsafe, illegal or in violation of our Community Standards.

Linktree Suspensions

These removals are associated with flagged items that were reviewed by the team and determined to be unsafe, illegal or in violation of our Community Standards.

Profile Suspensions

Banned accounts: Top violative categories

Number of Bans

Community violation reports

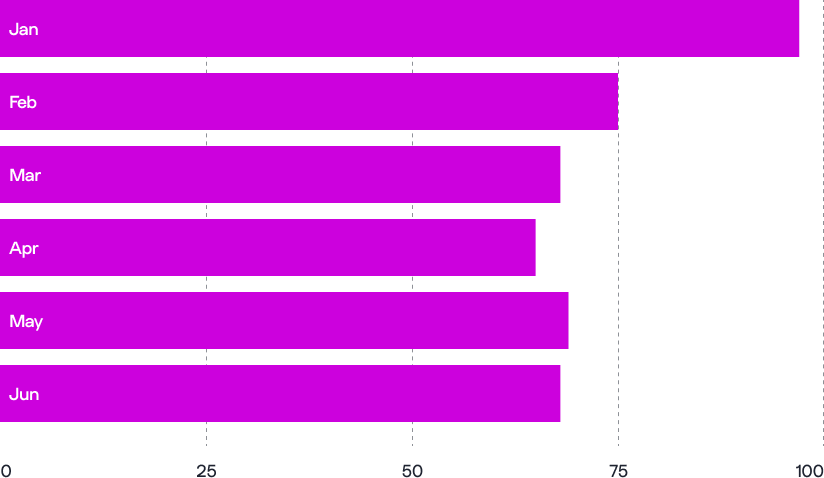

Number of violation reports received

During the January to June 2025 period covered by this report, we received 9,668 violation reports, averaging 1,611 per month.

Community Violation Reports

Please note that the category indicates what the reporter selected when submitting the report, which does not correlate with or guarantee moderation action.

This graph shows the ratio of reports where there was no confirmed violation, compared to reports that did result in a moderation action.

Overall, this shows that, on average, only 18.26% of the reports submitted during this period related to content that was in breach of our Community Standards.

Violation Reports: Rejected vs Accepted

This data reflects the difference in time (seconds) between when the violation report was submitted and when it was closed out by the moderator, including review time and moderation action.

Median Time in Queue (Seconds)
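As an illustration only, the timing metric described above can be sketched as the median of the gaps between submission and closure. The function name and the sample timestamps are hypothetical and do not reflect Linktree's internal tooling or data.

```python
from datetime import datetime
from statistics import median


def median_seconds_in_queue(reports):
    """Return the median seconds between submission and closure.

    `reports` is a list of (submitted_at, closed_at) datetime pairs.
    This is an assumed data shape, used purely for illustration.
    """
    durations = [
        (closed_at - submitted_at).total_seconds()
        for submitted_at, closed_at in reports
    ]
    return median(durations)


# Hypothetical sample data, not taken from the report.
sample_reports = [
    (datetime(2025, 1, 5, 9, 0, 0), datetime(2025, 1, 5, 9, 2, 0)),     # 120 s
    (datetime(2025, 1, 6, 14, 0, 0), datetime(2025, 1, 6, 14, 10, 0)),  # 600 s
    (datetime(2025, 1, 7, 8, 0, 0), datetime(2025, 1, 7, 8, 0, 45)),    # 45 s
]

print(median_seconds_in_queue(sample_reports))  # 120.0
```

A median is less sensitive than a mean to a small number of reports that sit in the queue for an unusually long time, which is one plausible reason to report it here.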

Appeals may be submitted for almost any moderation action taken on an account, such as an account ban or link removal. We received a total of 4,947 appeals during this period.

Volume of Appeals

This is the ratio of appeals that were rejected (i.e. where the original moderation action stayed in place) vs. appeals that were accepted (i.e. where the decision was overturned and the account or content restored).

On average, 20.48% of appeals during this time were accepted.

Appeals: Rejected vs Accepted

This calculates the difference in time (seconds) between the appeal being submitted and the decision being made by the moderator, including review time and any reversal of moderation action.

Median Time in Queue (Seconds)

Intellectual property reports

When we receive an intellectual property report, it is manually reviewed to determine whether the report is valid and whether the reported Linktree contains infringing content. If it does, the content is removed and, if appropriate, the Linktree is suspended.

More on our intellectual property policy

Here’s a breakdown of the global volume of IP reports we received during the data period.

Trademark and Copyright

Trademark Reports

Copyright Reports

Median Response Time (Hours)

Legal requests

The table below provides a breakdown of all legal information requests received during the reporting period. Please note that subpoenas are only actioned when accompanied by the appropriate and official legal documentation.

Linktree has not received any reports from Trusted Flaggers, as defined by the Digital Services Act, during the data period. We remain committed to closely monitoring activity and maintaining an open channel of communication with our Trusted Flaggers to ensure swift action when necessary.

Monthly active users

Between 1 January 2025 and 30 June 2025, the average number of monthly active recipients was below the 45 million user threshold for being designated as a Very Large Online Platform (VLOP).

We define monthly active recipients as Linkers and visitors who visit our platform and interact with a profile at least once during the calculation period, for example by clicking a link. We have also attempted to limit this number to “unique” visits only, i.e. counting multiple visits by the same user only once in each month.
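As a rough sketch of the counting approach described above, the example below deduplicates interactions per user within each month and then averages the monthly totals. The record format, user identifiers and function name are assumptions made for illustration and do not describe Linktree's actual measurement pipeline.

```python
from collections import defaultdict


def average_monthly_active_recipients(events):
    """Average the number of unique active users per month.

    `events` is an iterable of (user_id, month) pairs, one per
    interaction, where `month` is a string such as "2025-01".
    This data shape is hypothetical.
    """
    users_by_month = defaultdict(set)
    for user_id, month in events:
        # A set keeps each user once per month, however often they interact.
        users_by_month[month].add(user_id)

    if not users_by_month:
        return 0.0

    monthly_counts = [len(users) for users in users_by_month.values()]
    return sum(monthly_counts) / len(monthly_counts)


# Hypothetical sample data: u1 interacts twice in January but counts once.
sample_events = [
    ("u1", "2025-01"),
    ("u1", "2025-01"),
    ("u2", "2025-01"),
    ("u1", "2025-02"),
]

print(average_monthly_active_recipients(sample_events))  # 1.5
```

In this sample, January has two unique users and February has one, so the average is 1.5.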

We will continue to monitor the number of average monthly active recipients and publish updated information in accordance with Article 24(2) of the DSA.